Thompson Sampling vs Pure Exploration Thompson Sampling

You are given \(N\) coins which may be biased. Whenever you toss a coin and get a Heads, you score a point. Now consider these two objectives:

- Maximize the total points scored.

- Find the coin which has the highest chance of landing Heads, a.k.a the best coin.

If you know the best coin, you can keep tossing this coin and thereby get the best score. But, the problem is that you don’t know the biases of these coins. Although these objectives are intimately related, they are very different.

This post is inspired from some of my recent work at XRCI. The set of \(N\) coins is equivalent to a Bernoulli Multi Armed Bandits (MAB), which I talked about in my previous blog post. There could be two different of objectives in MABs.

The first one, and probably the most extensively studied one is the objective of maximizing the cumulative reward or minimizing the regret incurred. This is the same as the first objective in our coin tossing problem. There are several provably optimal algorithms for this objective, but my favourite one is Thompson Sampling.

The second objective is to find the best arm. There exist two scenarios here:

- Fixed Budget: Find the best arm within a fixed number of arm pulls.

- Fixed Confidence: Find the best arm with a fixed high confidence in as few arm pulls as possible.

These can be termed as “Pure Exploration” problems, as the objective is to output one arm and not to maximize the rewards.

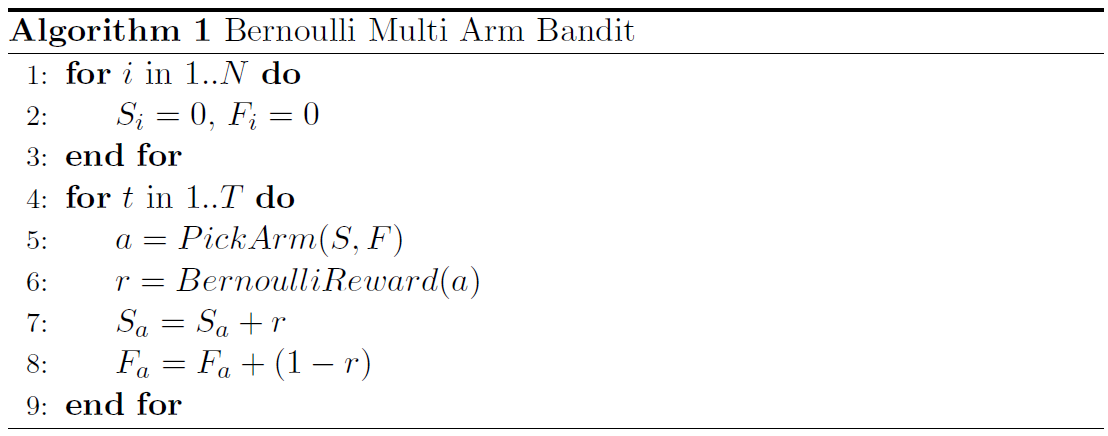

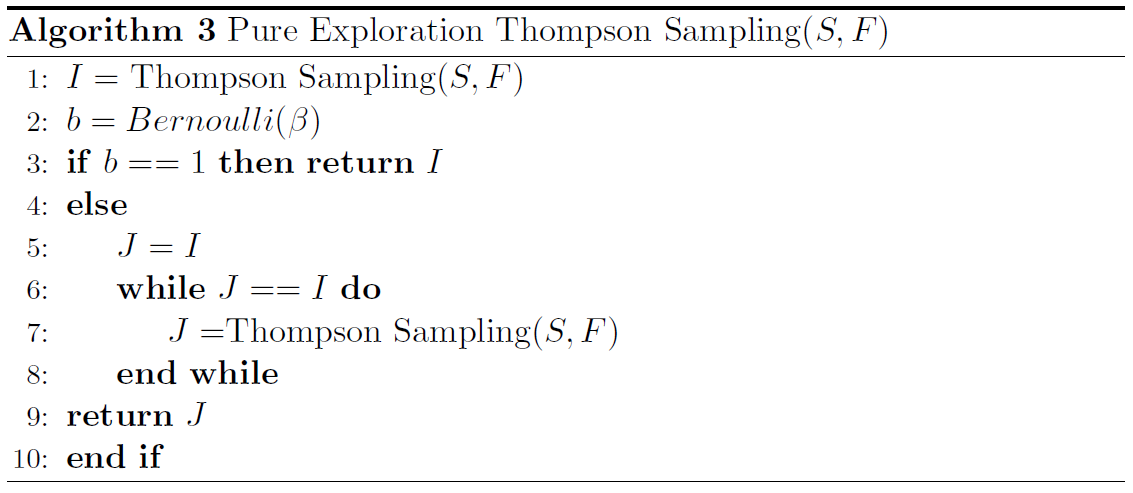

Now I present Bayesian algorithms for maximizing the rewards and for finding the best arm in the fixed confidence setting. They work by maintaining a prior distribution on the mean of each arm. As we are dealing with coins here, the rewards of the arms are drawn from a Bernoulli distribution. Form my previous post on Conjugate Priors, we know that a Beta distribution is the conjugate prior for a Bernoulli likelihood. Hence, we use a uniform Beta prior for each arm. Let the \(N\) arms(coins) be numbered from \(1\) to \(N\). Let the mean of arm \(i\)( probability of heads for coin \(i\) ) be \(\mu_i\). Let \(S_i\) denote the number of times arm \(i\) gave a reward \(1\) (coin \(i\) came up Heads) and \(F_i\) denote the number of times it gave a reward \(0\) (came up Tails). The procedure for picking arms and posterior updates is as follows:

Thompson Sampling (TS)

TS is a randomized Bayesian algorithm for maximizing the cumulative rewards collected. At each step, it samples a bandit instance from, the current posterior, i.e., samples a mean value for each of its arms from the current posterior. It then pulls the arm with the highest sampled mean. It updates the posterior of this arm according to the reward it receives. The TS algorithm for choosing arms is as follows:

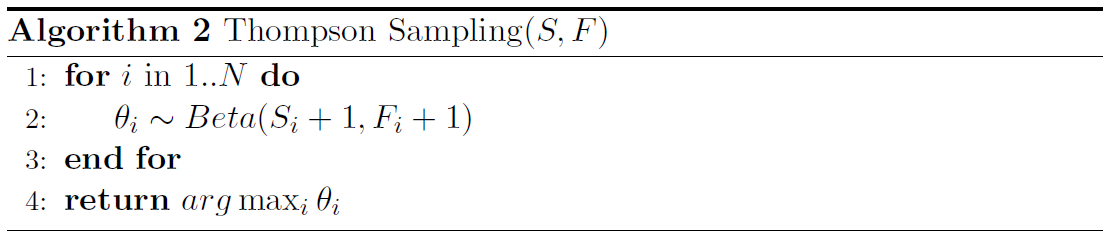

Pure Exploration Thompson Sampling (PTS)

TS cannot be used for pure exploration as it pulls the estimated best arm too often. This prevents it from refining its confidence on the optimal arm. PTS modifies TS by adding a resampling step. This prevents it from pulling the estimated best arm too often. With probability \(\beta\) (usually set to \(1/2\)) it pulls the estimated best arm. With probability \(1-\beta\) it pulls another arm. The PTS algorithm for choosing arms is as follows:

Calculating confidence

The confidence of an arm, denoted by \(\alpha_i\) is the probability of it being the optimal arm. We can calculate this value using the posterior distributions as follows:

\[\begin{align} \alpha_i &=\Pr(\theta_i\ge \theta_j, \text{ where } \theta_j \sim Beta(S_j+1,F_j+1))\\ &=\int_{x=0}^{1}\Pr(x \leq \theta_i < x+dx) \prod_{j\neq i}\Pr(\theta_j \leq x)\\ &=\int_{x=0}^{1} \big[Beta_{PDF}(S_i+1,F_i+1,x)\prod_{j\neq i}Beta_{CDF}(S_j+1,F_j+1,x)\big] dx\\ \end{align}\]Here, \(Beta_{PDF}\) is the probability density function and \(Beta_{CDF}\) cumulative density function of the Beta distribution.

For PTS, it has been proven that the confidence of the optimal arm converges to \(1\) at an exponential rate, with the rate of convergence being asymptotically optimal. Like TS, I believe PTS can also be extended for Pure exploration in other settings. My work at XRCI was on an algorithm for pure exploration in MDPs.

References:

- For an overview of the results on Thompson Sampling: Chapter 3 in Bayesian Reinforcement Learning: A Survey

- Pure Exploration Thompson Sampling: Simple Bayesian Algorithms for Best Arm Identification